Quickly share a folder using HTTP on a linux host

Working on a mixed environment, with Windows and Linux boxes, you might want to share some stuff from a linux machine with a user that doesn’t have an SSH client with file tranfer support or even installed. Or even send some files to download to a non-techie team mate. Something everybody is used to is downloading files from the browser, why not use that?

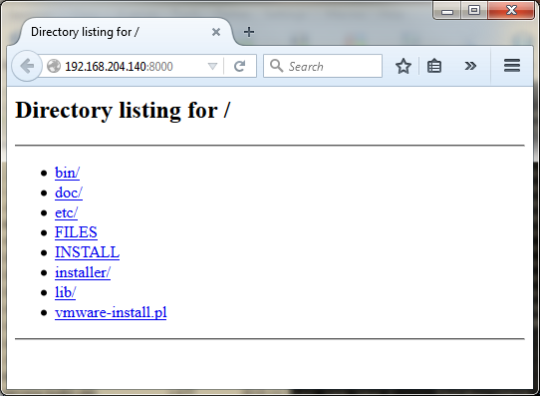

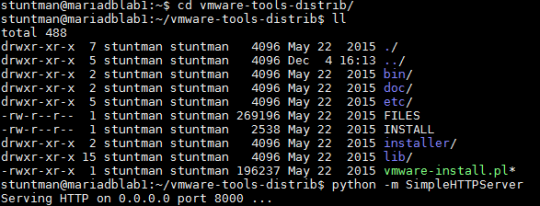

On your linux box, go to the directory where the files are stored and run the following command:

python -m SimpleHTTPServer

For example, I shared a folder called “vmware-tools-distrib”:

Afterwards, the target user only has to open the browser and navigate to the address of the linux box on port 8000.

Easy and handy.

Yahoo's MySQL Partition Manager is Open Source

The guys at Yahoo released their partition management script on github:At Yahoo, we manage a massive number of MySQL databases spread across multiple data centers.You can check out the original anoucement at the MySQL@Yahoo blog and the code at its github repo.

We have thousands of databases and each database has many partitioned tables. In order to efficiently create and maintain partitions we developed a partition manager which automatically manages these for you with minimal pre configuration.

SQL Server 2016: Always Encrypted feature

So, I was going through the documentation for SQL Server 2016 Always Encrypted feature and I read this paragraph:

The driver encrypts the data in sensitive columns before passing the data to SQL Server, and automatically rewrites queries so that the semantics to the application are preserved.

We’ll still be able to get the execution plan for the query from the server, but regarding optimization, similar issues to the ones that arise from ORM frameworks might pop-out.

Anyway, going further down the same documentation, you get the feature constraints, like:

- Queries can perform equality comparison on columns encrypted using deterministic encryption, but no other operations

- Indexing columns encrypted using randomized encryption is not supported.

- Query parameters that map to encrypted columns must be passed as driver-level parameters.

Also, you have some characteristics that disallow the use of encryption, like:

- Columns that are referenced by statistics

- Columns using alias type

- Partitioning columns

- Columns with default constraints

The case is clear that it’s a wonderful

security-wise

feature. Before adopting it on your business driven development, make sure it won’t become a source for technical debt for your current code base and future developments.

MySQL Bug #79497: Full text indexes and aggregate functions

Playing around with the Employees sample database and full text search on MySQL, I found a weird bug. After creating a full text targeting a column on a table, a "select distinct" query to retrieve the range of values returns an empty set.So if you initially perform the following query, the outcome comes with 7 rows:

mysql> select distinct title from titles;

+--------------------+

| title |

+--------------------+

| Senior Engineer |

| Staff |

| Engineer |

| Senior Staff |

| Assistant Engineer |

| Technique Leader |

| Manager |

+--------------------+

7 rows in set (0.38 sec)If you create a fulltext index on the titles table, over the title column:mysql> alter table titles add fulltext index `title` (`title`);

Query OK, 0 rows affected (14.65 sec)

Records: 0 Duplicates: 0 Warnings: 0

mysql> select distinct title from titles;So, apparently our records are gone? Repeat the query, but with COUNT:

Empty set (0.00 sec)

mysql> select count(distinct title) from titles;

+-----------------------+

| count(distinct title) |

+-----------------------+

| 7 |

+-----------------------+

1 row in set (0.24 sec)

The records are not gone, they justo won't appear with the DISTINCT aggregate function. If you drop the FT index, the query returns the correct result set.If dropping the index is not an option, you can workaround this bug, repeating the query with UCASE:

mysql> select distinct(ucase(title)) from titles;

+--------------------+

| (ucase(title)) |

+--------------------+

| SENIOR ENGINEER |

| STAFF |

| ENGINEER |

| SENIOR STAFF |

| ASSISTANT ENGINEER |

| TECHNIQUE LEADER |

| MANAGER |

+--------------------+

7 rows in set (0.44 sec)

This bug is already reported at MySQL Bugs. It's #79497. It appears to be an upstream issue, reproducible on current 5.6 and 5.7.MySQL Bug #79497: Full text indexes and aggregate functions

Link: MySQL Bug #79497: Full text indexes and aggregate functionsThis bug is already reported at MySQL Bugs. It appears to be an upstream issue, reproducible on current 5.6 and 5.7.

Should we be muddying the relational waters? Use cases for MySQL & Mongodb | Scalable Startups

Link: Should we be muddying the relational waters? Use cases for MySQL & Mongodb | Scalable StartupsSeems I’m not the only one keeping away from JSON on the relational DB:

I would shy away from the NoSQL add-ons that some relational vendors have added, to compete with their newer database cousins. This starts to feel like a fashion contest after a while.

You can also get my point of view on this matter:

Get a summary footprint on a MySQL server instance

Landing on an enterprise with ongoing projects mean that servers are often handed to IT staff without complete knowledge of what's inside.I've built a simple script, scraping from here and there, to gather a summary of relevant information.

Once you've gained remote access to the MySQL instance, you can execute the queries to identify the following information regarding the target database server:

- The host name, what operating system it runs on, the MySQL version installed, default collation of the instance, the installation directory and the data directory;

- How many user databases it hosts, what they're called and the collation used;

- The size of each database as a whole and broken down by storage engine;

- All the tables and their space used ;

- The creation and last modification on each database.

Get a summary footprint on a MySQL server instance

Link: Get a summary footprint on a MySQL server instanceLanding on an enterprise with ongoing projects mean that servers are often handed to IT staff without complete knowledge of what’s inside.

I’ve built a simple script, scraping from here and there, to gather a summary of relevant information.

JSON on MySQL: still not fond of it.

Earlier, I posted here about my dislike of allowing developers to push the JSON workload into the database server.

Reading this entry on a nice blog about MySQL, what caught my eye was the sentence:

Funny. It all started with the idea to be schema-free. Now people seem to need schema enforcement.

I’ve also seen this article on DZone which sarcastically illustrates the mislead of developers caused by the hype on JSON:

That’s it, then; let’s use JSON and manifest the death of XML!

I got reassurance on Twitter that MySQL performance won’t be compromised for using the new JSON datatype and operations:

@mjlmo I would like to see it be inc. in the standards (where it is currently being discussed). Overhead is well amortized by IO on insert.

— morgo (@morgo) September 10, 2015Not wanting to flog a dead horse here, by coming back to this topic, but it’s really not about the features or how they perform well on the engine. It’s what developers come up with to make things work.

Apparently, already there are bright minds that would like to cripple the flexibility of JSON with a sort of schema validation. Who knows what else will come up? And since the door is open to shove all that bright ideas inside the database engine, might as well make developers at home and put it up to them to solve the upcoming performance issues later on.

Configuring and testing MySQL binary log

The binary log contains “events” that describe database changes. On a basic installation with default options, it's not turned on. This log is essential for accommodating the possible following requirements:- Replication: the binary log on a master replication server provides a record of the data changes to be sent to slave servers.

- Point in Time recovery: allow to recover a database from a full backup and them replaying the subsequent events saved on the binary log, up to a given instant.

#Enabling the binary log

log-bin=binlog

max_binlog_size=500M

expire_logs_days=7

server_id=1- Binary log is turned on and every file name will be 'binlog' and a sequential number as the extension;

- The maximum file size for each log will be 500 megabytes;

- The binary log files expire and can be purged after 7 days;

- Our server has 1 as the identification number (this serves replication purposes but is always required).

shell> sudo service mysql restart

mysql> show binary logs;

+---------------+-----------+

| Log_name | File_size |

+---------------+-----------+

| binlog.000001 | 177 |

| binlog.000002 | 315 |

+---------------+-----------+

2 rows in set (0.00 sec)

- sakila-schema.sql: creates the schema and structure for the sakila database;

- sakila-data.sql: loads the data into the tables on the sakila database.

shell> mysql -u root -p < sakila-schema.sql

mysql> flush binary logs;

Query OK, 0 rows affected (0.01 sec)

mysql> show binary logs;

+---------------+-----------+

| Log_name | File_size |

+---------------+-----------+

| binlog.000001 | 177 |

| binlog.000002 | 359 |

| binlog.000003 | 154 |

+---------------+-----------+

3 rows in set (0.00 sec)shell> mysql -u root -p < sakila-data.sql

mysql> show binary logs;

+---------------+-----------+

| Log_name | File_size |

+---------------+-----------+

| binlog.000001 | 177 |

| binlog.000002 | 359 |

| binlog.000003 | 1359101 |

+---------------+-----------+

3 rows in set (0.01 sec)

shell> sudo mysqlbinlog --verbose /var/lib/mysql/binlog.000003Using the verbose option, the following commented output is produced from our log, one per each statement:

### INSERT INTO `sakila`.`country`

### SET

### @1=1

### @2='Afghanistan'

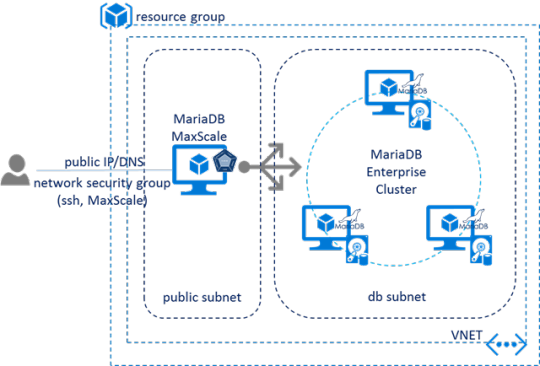

### @3=1139978640MariaDB Enterprise Cluster in Microsoft Azure Marketplace

Link: MariaDB Enterprise Cluster in Microsoft Azure Marketplace

MariaDB Enterprise Cluster with Galera technology is a multi-master cluster that achieves high availability and scalability through features such as synchronous replication, read and write to any cluster nodes without slave lag, automatic membership control of new nodes joining the cluster and failed nodes dropping from the cluster.

This is why you're not better off with a commercial database

When tackling a new enterprise project to support a given business, you face the challenge of choosing and committing to a database platform. The choice should be the one most adequate, given the needs and requirements of the new information system and data to be hosted and managed.Typically, a number of factors should be taken into consideration like security features, storage requirements, reliability, high availability, backups, disaster recovery, data compression, technical support and last but definitely not least, the cost of the solution. Added to that there is also performance, scalability and ease of administration to think about.

With the result of this analysis, most of the time, the verdict is this: data platforms available as community editions or free open source fall short on the given requirements fulfillment. So, the advice is almost always to acquire commercial licenses or expand the licensing already owned.

And this should give you peace of mind for a while. At least until the first release of the system goes live. After that, some of the common pitfalls are:

- Security permissions were not exhaustively identified for all database objects. To solve things quickly, you turn your database authorization management into Swiss cheese;

- The new system has issues, until the bugs are fixed, manual correction scripts have to be executed on working hours, maiming overall business activity;

- As the data volume grows, there is performance degradation due to inefficient indexing, bad user experience design or poor database coding skills;

- Technical support provided by the database vendor performs an audit on the workload, does some tuning on the server instance, and shifts responsibility on the remaining lack of performance over to the development team;

- The development team struggles adopting the database vendor recommendations as it has great impact on the source code;

- Management wants high availability, but it won't commit the infrastructure resources and budget to set it up properly;

- You do not have a remote site so that a disaster recovery plan can be made, you don't have a lab where you regularly restore backups and perform automated integrity checks;

- You are understaffed and with no one possessing deep skills on the specific data platform you own;

Even if just a third of these pitfalls sound familiar, what are you doing with your next project? Still thinking on recommending commercial software because people are the ones to blame here?

On a global organization, after you deploy the first release and spread it across the offices, the licensing and support costs will skyrocket. That money could be spent preventing some of the pitfalls mentioned here. If you cut back on licensing and support, you can spend on infrastructure and staff.

There are wonderful commercial databases out there, but on the business requirements phase, the pick should be done as a whole and not based on vendor promises because the final solution will be a result of development and available budget, not a sales brochure.

Vision and engineering are the keys to success. And I'm afraid that doesn't come out of the box.

SQL Server Row Level Security | Official Pythian Blog

Link: SQL Server Row Level Security | Official Pythian BlogSo I’ve read the article above and thought:

- Will managers realize they have to document a formal data security policy?

- Will DBA staff be accountable for wrong permissions: granting too much or too little access to data?

Overall, I think it’s definitely a good security enhancement but security lies on the weakest link, right?

Oracle Announces General Availability of MySQL 5.7

Oracle today announced the general availability of MySQL 5.7, the latest version of the world’s most popular open source database. The new version delivers greater performance, scalability and manageability, plus enhanced NoSQL capabilities with JSON support and MySQL Router, which makes it easy to connect applications to multiple MySQL databases.Read the press release at: https://www.oracle.com/corporate/pressrelease/mysql-5-7-ga-101915.html

Learn to stop using shiny new things and love MySQL

A nice post on the Pinterest engineering blog:https://engineering.pinterest.com/blog/learn-stop-using-shiny-new-things-and-love-mysql

My favorite advice from there: "Keep it simple. No matter what technology you’re using, it will fail."

Happy reading.

This is why you're not better off with a commercial database

When tackling a new enterprise project to support a given business, you face the challenge of choosing and committing to a database platform. The choice should be the one most adequate, given the needs and requirements of the new information system and data to be hosted and managed.

Typically, a number of factors should be taken into consideration like security features, storage requirements, reliability, high availability, backups, disaster recovery, data compression, technical support and last but definitely not least, the cost of the solution. Added to that there is also performance, scalability and ease of administration to think about.

With the result of this analysis, most of the time, the verdict is this: data platforms available as community editions or free open source fall short on the given requirements fulfillment. So, the advice is almost always to acquire commercial licenses or expand the licensing already owned.

And this should give you peace of mind for a while. At least until the first release of the system goes live. After that, some of the common pitfalls are:

- Security permissions were not exhaustively identified for all database objects. To solve things quickly, you turn your database authorization management into Swiss cheese;

- The new system has issues, until the bugs are fixed, manual correction scripts have to be executed on working hours, maiming overall business activity;

- As the data volume grows, there is performance degradation due to inefficient indexing, bad user experience design or poor database coding skills;

- Technical support provided by the database vendor performs an audit on the workload, does some tuning on the server instance, and shifts responsibility on the remaining lack of performance over to the development team;

- The development team struggles adopting the database vendor recommendations as it has great impact on the source code;

- Management wants high availability, but it won’t commit the infrastructure resources and budget to set it up properly;

- You do not have a remote site so that a disaster recovery plan can be made, you don’t have a lab where you regularly restore backups and perform automated integrity checks;

- You are understaffed and with no one possessing deep skills on the specific data platform you own;

Even if just a third of these pitfalls sound familiar, what are you doing with your next project? Still thinking on recommending commercial software because people are the ones to blame here?

On a global organization, after you deploy the first release and spread it across the offices, the licensing and support costs will skyrocket. That money could be spent preventing some of the pitfalls mentioned here. If you cut back on licensing and support, you can spend on infrastructure and staff.

There are wonderful commercial databases out there, but on the business requirements phase, the pick should be done as a whole and not based on vendor promises because the final solution will be a result of development and available budget, not a sales brochure.

Vision and engineering are the keys to success. And I’m afraid that doesn’t come out of the box.

Photo Credit: tec_estromberg via Compfight cc

MSSQL Database Engine Security Checklist

So this is a really nice shortlist for any DBA. Without being too detailed, it gives a good starting point to perform a security audit on your Microsoft SQL Server database instances.

The aspects covered are:

- Physical Security

- Operating System Configuration

- Database Instance Configurations

At the end it has some links to other related checklists. It’s on the Technet wiki and should be updated regularly.

Check it out here: http://social.technet.microsoft.com/wiki/contents/articles/1256.database-engine-security-checklist-database-engine-security-configuration.aspx

Developing a MySQL Workbench plugin

The MySQL Workbench tool is great for development and administration tasks. Also it's available on Windows, Linux and Mac OS X which, according to information from third party sources, is more than you can say for most of the other equivalent tools. And Workbench is free.Having said that, most of the provided functionalities are intuitive and of daily use for developer and DBA staff alike. Moving beyond this rich out-of-the-box features set, Workbench empowers it's users to extend and develop their own custom features. For this purpose, we use both of the following:

- GRT: Generic RunTime is the internal system used by Workbench to hold model document data. It is also the mechanism by which Workbench can interact with Modules and Plugins.

- MForms: MForms is a small GUI toolkit library written for use in the MySQL Workbench project.

I've developed a small proof of concept to learn how to code a custom plugin. The purpose is to provide a simple refactoring feature for the script on the SQL editor (basically it's a find and replace):

To briefly explain the code, first we import all of the workbench (wb) modules, grt and mforms:

# import the wb moduleThen we declare the module information, its name, author and version:

from wb import *

# import the grt module

import grt

# import the mforms module for GUI stuff

import mforms

# define this Python module as a GRT module

ModuleInfo = DefineModule(name="Refactor", author="mjlmo", version="0.1")

@ModuleInfo.plugin("wb.text.refactor", caption = "Refactor Selection", input=[wbinputs.currentQueryBuffer()], pluginMenu= "SQL/Utilities")Finally, we insert the code to perform tha task in hand, in this case replace a selected text on the SQL editor with a given one on an input box. If there is no text selected, a message box will appear on screen stating that. Otherwise, we proceed with the refactoring operation.

@ModuleInfo.export(grt.INT, grt.classes.db_query_QueryBuffer)

To install this plugin, simply open MySQL Workbench, choose the "Scripting > Install Plugin/Module ..." option, browse to the "refactor_grt.py" file location and open it. Close and re-open the tool. There should be a new option on the "Tools > Utilities" menu called "Refactor Selection". Also, the plugin appear on the plugin manager:

You can read another full example regarding MySQL Workbench on how to define a module and define a plugin in Python here:

http://mysqlworkbench.org/workbench/doc/

MySQL - basic network security

Having secured our MySQL server and created a personal account to allow for remote administration, we can take one step further on blocking unwanted access to our database server. For this example, we'll continue to use the Ubuntu 14.04.2 LTS Server installed and configured on the previous posts.After booting up the server, running a network check for connections, lists our server listening on ports 22 and 3306 (mysql):

We also used the Uncomplicated Firewall, a frontend for iptables, to check if the server's firewall is enabled. It's inactive and consequently all listening ports are available to the network.

Let's adopt the following policy:

- SSH port is open to the network and available for sysadmin staff. Access control will be implemented on dedicated network firewalls;

- MySQL port is closed and has to be authorized on demand, on a client machine adress basis.

sudo ufw allow from 192.168.204.XXX to 192.168.204.132 port 3306

stuntman@MuSQL-Lab:~$ netstat -at

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 *:ssh *:* LISTEN

tcp 0 0 192.168.204.132:mysql *:* LISTEN

tcp 0 0 192.168.204.132:mysql 192.168.204.X:54617 ESTABLISHED

tcp 0 0 192.168.204.132:mysql 192.168.204.X:54618 ESTABLISHED

tcp 0 64 192.168.204.132:ssh 192.168.204.X:54483 ESTABLISHED

tcp6 0 0 [::]:ssh [::]:* LISTEN

stuntman@MuSQL-Lab:~$stuntman@MuSQL-Lab:~$ sudo ufw status

Status: active

To Action From

-- ------ ----

22/tcp ALLOW Anywhere

192.168.204.132 3306 ALLOW 192.168.204.X

22/tcp (v6) ALLOW Anywhere (v6)jqBootstrap ignores required field custom error message

jqBootstrap is a JQuery validation framework for bootstrap forms. While using it to validate required fields, on the form submission I got a tooltip with a “required field” message but not the custom I had put on the markup.

In short, “required” is part of the HTML5 standard and it does what it’s supposed to, validates the input on a given field.

Said that and given that the “sniffHtml” setting didn’t do the trick, I got things done with two control events that customize the HTML5 behaviour:

<input id=“myHtmlControl” name=“myHtmlControl” type=“text” required oninvalid=“this.setCustomValidity(‘You really should write on this field.’)” onchange=“this.setCustomValidity(”)“>

Hope this saves someone time :)

SQL Server 2016 Installation Changes

The installation assistant of Microsoft SQL Server database had a major leap in customization features since the 2008 versions.

Again, on the yet to be released SQL Server 2016, the installation process offers the following new possibilities:

PolyBase allows you to use T-SQL statements to access data stored in Hadoop or Azure Blob Storage and query it in an adhoc fashion. However, installing Oracle Java Runtime Environment will be a requirement.

Concerning the TempDB, by default, setup will add as many tempdb files as the CPU count or 8, whichever is lower.

Care to try it out? Dowload it at:

https://www.microsoft.com/en-us/evalcenter/evaluate-sql-server-2016/

MySQL sample databases: for testing and training

Sometimes it's useful to have a set of data prepared to be used on a fresh MySQL install for testing purposes. Or you might be preparing some training or workshop and want to prepare examples with fake information. For MySQL there are some choices available:- Employees Sample Database: provides a combination of a large base of data (approximately 160MB) spread over six separate tables and consisting of 4 million records in total.

- Sakila Sample Database: is intended to provide a standard schema that can be used for examples in books, tutorials, articles, samples, and so forth. Sakila sample database also serves to highlight the latest features of MySQL such as Views, Stored Procedures, and Triggers.

- BIRT Sample Database: The schema is for Classic Models, a retailer of scale models of classic cars. The database contains typical business data such as customers, orders, order line items, products and so on.

JSON on the database? No thanks!

JSON is a wonderful thing for developers. It’s becoming more and more the de facto standard for information interchange and asynchronous operations inside applications. So much so that database engines are integrating it on their latest release. Examples of that are:

I wont even challenge the advantages and performance measurements on such primitives, but both from a database administrator perspective, as well as a developer’s one, I think this is a bad idea!

The major pitfalls on using this native integration would be:

- Injecting database vendor dependency on the application, since these enhancements work on specific primitives not part of SQL;

- Processing expense and memory allocation is shifted on to the database server, whose main role should be serving data not formatting or performing interpretations from it.

So you might end up with a great application that uses the most recent features from your specific RDBMS but from then on you are stuck with it and hope you don’t forget to perform the server sizing including the JSON processing load.

Still on Azure security...

Tired of so much skepticism on hybrid cloud security when compared to private infrastructures, let me ask how many of your corporate data centers, besides the usual VPN linked to the office, have MFA?

Watch and learn:

https://channel9.msdn.com/Blogs/Windows-Azure/WA-MFA-Overview?ocid=player

Save up to 50% on all books & eBooks - Microsoft Press

Discount code MOREPRESS confers a 35% discount off the list price of one product or 50% off the list price of two or more products purchased on microsoftpressstore.com in a single transaction.

Check it out here:

Discount offer expires September 9, 2015.

Print tab separated values as table using MySQL

Using mysql command line utility to get recordsets, the data rows alignment and line breaks are often a mess. You can use the command line tool on a mysql database server to get a set of rows into an output TSV file like so:shell> mysql -u your_user -p < your_statement.sql > data.csvI came up with the following python script to grab the output file and pretty print:You can put your own filename instead of 'data.csv'. And of course, this script also works for other TSV files that don't come from mysql. To run the script you should install the python tabulate package:

https://pypi.python.org/pypi/tabulate

Guide to protecting your virtual infrastructure and cloud-based applications on Azure

For those still aprehensive about how the cloud can preserve your data center security mechanisms, this is a must read:

Microsoft Azure Network Security

Learn about the numerous security controls available in Azure, and get guidelines for protecting Azure virtual machines and Azure cloud services in this new white paper.

What have we learnt in two decades of MySQL?

Article on Information Age:From obscurity to the mainstream, the journey of MySQL shows the power of the open source community to drive innovation.Read the full article here:

http://goo.gl/bqFZPb

mysqlpump — A Database Backup Program

The MySQL 5.7 Release Notes for version 5.7.8 are out. Besides the new JSON data type, there is also a new tool, called mysqlpump, which offers the following features:- Parallel processing of databases, and of objects within databases, to speed up the dump process

- Better control over which databases and database objects (tables, views, stored programs, user accounts) to dump

- Dumping of user accounts as account-management statements (CREATE USER, GRANT) rather than as inserts into the mysql system database

- Capability of creating compressed output

- Progress indicator

- For dump file reloading, faster secondary index creation for InnoDB tables by adding indexes after rows are inserted

... mysqlpump is not currently consistent. That is to say that currently each of the dump threads lack a synchronization point before they start backing up the data. This makes it currently unsafe as a general purpose backup replacement.Happy testing!

New feature: Powershell Resource Gallery

This is a new feature already becoming available in Windows Management Framework (WMF) 5.0 Preview. The PowerShell Gallery is the central repository for Windows PowerShell content. You can find new Windows PowerShell commands or Desired State Configuration (DSC) resources.

To get started with the gallery, it requires the latest version of the PowerShellGet module, available in Windows Management Framework (WMF) 5.0 Preview.

With the latest WMF Preview, you can:

- Search through modules in the Gallery with Find-Module

- Install modules from the Gallery with Install-Module

- Update your modules to the latest version with Update-Module

- Add your own custom repository with Register-PSRepository

Check out the Getting Started page for more information on how to use PowerShellGet commands with the Gallery.

To just browse the exisiting packages you can simply visit the gallery.

Publishing to the Gallery is limited to an invitation only audience during the preview period.

Creating user accounts on a secured MySQL server

After installing a MySQL database server and securing that installation with the mysql_secure_installation tool, you are locked out from remote access to perform any operation on the server. Since we all like the 'R' in RDBMS to stand for remote as well as relational, let's see how we can configure user credentials to provide remote access to the database server but still keep those credentials from providing local access.Suppose you have a key user named James Bond to whom you want to give remote administration on the MySQL server. However, you don't want him to logon locally on the machine to perform any operations. This way you achieve three levels of security:

- The credential only allows remote access;

- You control from which remote computers the user can connect, by configuring rules on a network or server firewall;

- IF it's a shared server, console access on the machine doesn't give access to the database engine.

shell> mysql -u root -pNow, for the sake of this example, let's create a user account for James Bond and give him full privileges:mysql> CREATE USER 'jamesbond'@'%' IDENTIFIED BY 'double0seven';Having set this up, if James Bond tries to login from any remote computer he can because we used the wildcard '%' to specify the user's machine. But he can also login from the local server. To prevent this from happening, we create a second account with the same username and password, but do not grant any permissions:

mysql> GRANT ALL PRIVILEGES ON *.* TO 'jamesbond'@'%' WITH GRANT OPTION;

mysql> CREATE USER 'jamesbond'@'localhost' IDENTIFIED BY 'double0seven';If the user then tries to login again from the local machine, he can. But isn't able to perform any operations or queries.Securing your MySQL server

After installing a MySQL database server, like the one I posted earlier, if it's going to be a production environment than you should consider securing the instance by eliminating some of the basic vulnerabilities that come with a generic install.Fortunately MySQL and MariaDB already come with a tool for that purpose, called mysql_secure_installation. This program enables to perform the following improvements to the security of your installation:

- set a password for root accounts.

- remove root accounts that are accessible from outside the local host.

- remove anonymous-user accounts.

- remove the test database (if exists), which by default can be accessed by anonymous users.

Be advised that as of MySQL 5.7.2, this tool is an executable binary available on all platforms. Before version 5.7.2, it was a script available only for Unix and Unix-like systems.

Invoking the tool without any arguments:

shell> mysql_secure_installationThe script will prompt you to determine which actions to perform:NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MySQL

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MySQL to secure it, we'll need the current

password for the root user. If you've just installed MySQL, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MySQL

root user without the proper authorisation.

You already have a root password set, so you can safely answer 'n'.

Change the root password? [Y/n] n

... skipping.

By default, a MySQL installation has an anonymous user, allowing anyone

to log into MySQL without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] y

... Success!

By default, MySQL comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] y

- Dropping test database...

ERROR 1008 (HY000) at line 1: Can't drop database 'test'; database doesn't exist

... Failed! Not critical, keep moving...

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MySQL

installation should now be secure.

Thanks for using MySQL!Basic MySQL installation on Ubuntu Server

Cenario

Installation Process

sudo apt-get install mysql-serversudo netstat -tap | grep mysqlsudo service mysql restartPost Install Validation

shell> mysql --user=user_name --password=your_password db_nameFor the list of all MySQL commands type 'help'. To exit, type 'quit'.

Installation path analysis

- /usr/bin/ - binary files for the server and command line utilities

- /usr/lib/ - libraries

- /usr/share/man/ - manual pages

- /usr/share/mysql/ - helper scripts for installation and language support

- /var/lib/mysql/ - data files

- /usr/lib/mysql/plugin/ - plugins installed

- /etc/mysql/ - configuration files

Visual Studio 2015 Final Release Event

Link: Visual Studio 2015 Final Release EventWatch the live event or download it now:

https://www.visualstudio.com/downloads/download-visual-studio-vs

JQuery getJSON fails on IE

On a current project, I found that the ajax calls scripted using jquery’s getJSON were failing with no error message and didn’t even get to send the http request.

Doing some searches on the Internet, I found all sorts of mumbo jambo, about previous versiosn of IE not supporting xmlHTTPRequest, possible hacks to the code and turning the getJSON use into the equivalent ajax request.

Turns out, our test site was being fitted into the Intranet Security Zone and there’s an option, that usually gets turned on, where you can set IE to use the compatibility view for every intranet site:

Although useful for legacy software on corporate environments, it’s killing modern web development.

I’m really looking forward to living on the Edge:

“we encourage site developers to update their sites now to work well in Edge mode and serve the same markup they use with other browsers”

'It's a graveyard': The software devs leaving Greece for good | ZDNet

Link: 'It's a graveyard': The software devs leaving Greece for good | ZDNetWith the current globalization of services, is imigration still the way to go? Can’t developers land a a remote job and work from their homes?

Maybe we’re all ready to be customers for shopping and services that are in “the clouds”, but not for staffing projects.

when will we put people up there too?

Gmail Adds “Undo Send” Feature

Link: Gmail Adds “Undo Send” FeatureThis is a must have. Incredible how it’s been so long overdue!

Every mail service should have this feature.

#Powershell and SQL Server: Using the SQLPS Provider | Art of the DBA

Link: #Powershell and SQL Server: Using the SQLPS Provider | Art of the DBAA quick and easy read to get a feel of managing a SQL Server instance using powershell.

Security Concern: Alternative to extended stored procedures on Microsoft SQL Server

While securing a Microsoft SQL Server instance, there are many issues you should look after. According to the official documentation,

it can be viewed as a series of steps, involving four areas: the

platform, authentication, objects (including data), and applications

that access the system. On environments where SQL Server is used on a

more “Swiss Army Knife” fashion, there is often difficulty on disabling

or, at least, restricting access to the general extended stored procedures, like xp_cmdshell.

These stored procedures provide an interface from an instance of SQL

Server to external programs for various maintenance activities. But they

also represent a security liability. The alternative suggested is if

possible to use SQL CLR Integration

to perform these tasks. It can be done and it works, but maybe it isn’t

a desired task by the regular DBA to learn to program a .NET Framework

language.

With this in mind, as far as SQL Server Agent jobs are concerned, I’d suggest creating a Powershell job step.

Bear in mind this requires a specific kind of error handling. By default the ErrorActionPreference

is set to Continue, and this has implications on how errors bubble up

to the SQL Server Job Server. If you run a Windows PowerShell command as

a SQL Server Agent job and there are no syntax errors but the command

produces an error, the SQL Server Agent job will report success. If you

want an error condition to halt execution of a SQL Server Agent job or

to produce an error, you’ll need to add some error handling. To bubble

up Windows PowerShell errors to SQL Server Agent, you’ll need to set

your $ErrorActionPreference = “Stop”. You can check that out here.

For the processing task which are a hybrid of data manipulation and

other external system related tasks I’d suggest the development of

Powershell scripts that use SQL Server Management Objects (SMO) which is a collection of objects that are designed for programming all aspects of managing Microsoft SQL Server.

Sure, you might say that this suggestion doesn’t help from learning to

code, but this is an inevitable goal every SQL Server DBA must have as

the future might have projects where the projects might have a server core platform. What then?

So why not kill two rabbits with one stone and learn your Powershell and SMO, it quite seems the way to go. For starters, this might help.

On the same subject as this post, this editorial from SQLServer Central came out.

Happy coding!

Get a free Office 365 developer account

Microsoft is giving away a one year FREE subscription for an Office 365 Development

instance. Sign up and you’ll receive the link via email to setup

your subscription.

The Office 365 Developer Program is a vibrant and growing community of

developers, veteran and newbie, who are developing solutions on Office

365.

You can go to the sign up form from this page.

Issues with SQL Server 2014 Service Pack 1

Microsoft chose to remove SQL Server 2014 Service Pack 1 (SP1) from the Microsoft

Download Center due to a significant installation issue discovered

shortly after release.

When applying the patch, any SQL Server instance with SSISDB catalog enabled runs into an installation error during SQL Server 2014 SP1 upgrade. The

instance is then in an invalid and unusable state.

- In the 11 hours of availability, there were 270 downloads from the Download Center. No other distribution channels were affected.

- The original release was version 12.0.4050.0, the new release will have a new KB and build number.

- When SP1 is released again, Microsoft will recommend that 12.0.4050.0 is uninstalled prior to applying the new SP1 update. This includes any “Side by Side” instance scenarios.

- Microsoft is targeting release in a few weeks and will share updates as we go.

You can follow the updates on the official release page. If you have installed the update, there are also steps for a workaround.

For the Linux/Mac fans that find Windows less secure

Don’t blame the OS, blame the users. Reading this news about how Russian cyber attackers used security flaws to hack diplomatic targets in the US, I was particularly fond of the statement:

The Microsoft problem by itself is less dangerous, since it

involves enhanced powers on a computer from those of an ordinary user.

This is really about all those IT expert wannabes that make the machines go faster by disabling all security enhancements, such as the User Access Control.

Imagine a Linux machine with no password for sudo/su commands. Or a Mac that doesn’t prompt you for the password when something is trying to change the system.

That’s pretty much the same.

Article: SQL Server 2012 SP2 CU5 Available | Glenn Berry

Link: Article: SQL Server 2012 SP2 CU5 Available | Glenn BerrySQL Server 2012 SP2 CU5 Available | Glenn Berry

Free ebooks from Packt Publishing

The guys at Packt Publishing are giving away ebooks. It’s one book per day until March 5th.

Check them out daily at:

Article: Free ebook: Microsoft Azure Essentials Fundamentals of Azure

Link: Article: Free ebook: Microsoft Azure Essentials Fundamentals of AzureFree ebook: Microsoft Azure Essentials Fundamentals of Azure

Microsoft mainstreams business intelligence with new and improved Power BI Preview - The Official Microsoft Blog

Link: Microsoft mainstreams business intelligence with new and improved Power BI Preview - The Official Microsoft BlogToday is an incredibly exciting day as we unveil the new Power BI – a service we believe will fundamentally transform the “business of business intelligence.” Power BI can help every company adopt a data culture with easy-to-use, accessible tools and innovative technology that lowers the barrier to entry, for all. For those not familiar with Power BI, it is a cloud-based business analytics service (software-as-a -service) for non-technical business … Read more »

Pluralsight Starter Subscription for Microsoft TechNet

Link: Pluralsight Starter Subscription for Microsoft TechNetFREE Pluralsight Starter subscription that includes 13 hand-selected courses. This offer is only available through April 30, 2015.

.NET Core is on Github

Link: .NET Core is on GithubCoreCLR is the .NET execution engine in .NET Core, performing functions such as garbage collection and compilation to machine code. .NET Core is a modular implementation of .NET that can be used as the base stack for a wide variety of scenarios, today scaling from console utilities to web apps in the cloud.

For those of you who missed what .NET Core is all about, this is a must read:

http://blogs.msdn.com/b/dotnet/archive/2014/12/04/introducing-net-core.aspx

USING TEMPLATES WITH SQL SERVER MANAGEMENT STUDIO

Link: USING TEMPLATES WITH SQL SERVER MANAGEMENT STUDIOFull of awesome indeed!

Picture: How to take a screenshot in 1983: http://t.co/kYg5bX4SF4

Link: Picture: How to take a screenshot in 1983: http://t.co/kYg5bX4SF4How to take a screenshot in 1983:

Why dynamic TSQL should be banned from Stored Procedures

The guys from SQLServerCentral.com published an article giving a Quick Tour of sp_ExecuteSQL. This is all very well, except for the antibodies in me regarding dynamic TSQL inside frequently used stored procedures.

Yes, it’s true that “Every once in a while you find yourself working with SQL Server and you need to execute a piece of T-SQL that will be different at the time of execution from the time that the code is being written.” but pointing out SQL injection as the single concern is quite an understatement.

The key downside events regarding dynamic TSQL are statement compile time and execution plans. You can read on the official documentation at MSDN: “The Transact-SQL statement or batch in the sp_executesql @stmt parameter is not compiled until the sp_executesql statement is executed. The contents of @stmt are then compiled and executed as an execution plan separate from the execution plan of the batch that called sp_executesql.”

Furthermore “sp_executesql can be used instead of stored procedures to execute a Transact-SQL statement many times when the change in parameter values to the statement is the only variation. Because the Transact-SQL statement itself remains constant and only the parameter values change, the SQL Server query optimizer is likely to reuse the execution plan it generates for the first execution.”

One can try to optimize this dynamic execution by using parameter substitution, so the the Transact-SQL string is built only one time. But there are still aspects you can’t control:

- What is the generated execution plan for the dynamic statement, running separate from the execution plan of the batch that called sp_executesql;

- Is the execution plan any good? You can’t really tell to try to change it;

- Good or bad, the plan is cached for the following executions. If the diversity of queries that can dynamically be run is large, there’s no gain from the plan caching;

- Using the RECOMPILE option solves the above issue but creates another: each time the stored procedure is called, the execution plan is recompiled. For long running queries this might be overlooked, but for queries otherwise fast, it’s a major performance drawback.

Having stated my displeasure for the dynamic TSQL, I can also recommend the following readings:

In all applications I witnessed the development, using dynamic TSQL inside stored procedures caused nothing but trouble as soon as the tiny tables turned into a larger, business critical database with hundreds of users.

No comments :

Post a Comment